Stay Integrated

How data collection will save your life

Examining advanced driver assistance system data collection for the future of vehicle engineering

By Joelle Beuzit

What is ADAS? The acronym stands for advanced driver assistance systems. It is a generic term for all past, current, and future electronically controlled advanced safety systems in a car. These include, but are not limited to, automated emergency braking systems (AEBS), lane-keeping assistance systems (LKA), adaptive cruise control (ACC), and blind-spot monitoring (BSM).

Behind the acronyms hides essential technology that saves lives. Human error causes most road accidents, so driver assistance systems were developed to make driving safer and more comfortable. Based on the information captured by the sensors around the car, the assistance system warns the driver of a critical driving condition. The lane-keeping assistance system, for example, uses front cameras to detect the presence and trajectory of the driving lanes. If the driver drifts unexpectedly out of the lane, the system issues sensory warnings: a short beep, a warning light, and a sensation of resistance in the steering wheel. Depending on the car’s level of automation, the assistance system might even initiate a braking or evasive maneuver to protect the driver and the vehicle.

How assistance systems work

Vehicles that feature advanced driver assistance systems are fitted with many sensors. These sensors, which include short- and long-range radars, low- and high-resolution cameras, and 3-D laser scanning sensors (lidars), gather information on the vehicle’s operational environment. The vehicle’s electronic control unit (ECU) uses this information to make an appropriate driving decision, such as issuing a warning, slowing down, or applying the brakes.
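As a rough illustration of how sensor information might be turned into one of these decisions, the sketch below maps a time-to-collision estimate onto an escalating response. It is a minimal example under stated assumptions, not how any production ECU works: the `Detection` structure, the `decide` function, and the threshold values are all hypothetical and chosen only for readability.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NONE = "none"
    WARN = "warn"
    SLOW_DOWN = "slow_down"
    BRAKE = "brake"


@dataclass
class Detection:
    """One fused obstacle estimate (hypothetical structure)."""
    distance_m: float          # distance to the obstacle ahead
    closing_speed_mps: float   # positive when the gap is shrinking


def decide(detection: Detection,
           warn_ttc_s: float = 4.0,
           slow_ttc_s: float = 2.5,
           brake_ttc_s: float = 1.2) -> Action:
    """Map time-to-collision (TTC) onto an escalating response.

    The thresholds are illustrative assumptions, not calibrated values.
    """
    if detection.closing_speed_mps <= 0:
        return Action.NONE  # the gap is constant or growing
    ttc_s = detection.distance_m / detection.closing_speed_mps
    if ttc_s <= brake_ttc_s:
        return Action.BRAKE
    if ttc_s <= slow_ttc_s:
        return Action.SLOW_DOWN
    if ttc_s <= warn_ttc_s:
        return Action.WARN
    return Action.NONE
```

For instance, an obstacle 30 m ahead with a closing speed of 10 m/s gives a time-to-collision of 3 s, which falls in the warning band of this sketch. A real system would also have to weigh detection confidence, which is where the difficulties described next come in.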

However, real-life driving conditions are rarely simple. Take, for example, pedestrian detection systems, which are designed to avoid potentially fatal collisions between cars and pedestrians. The American Automobile Association (AAA) published a study in October 2019 that warned vehicle owners not to rely completely on their collision avoidance systems and to remain engaged and alert regardless of these safety features. While most systems detected an adult crossing the street, the vast majority failed to avoid a collision with a child darting out. Worse, all systems failed to properly detect pedestrians at night.

So, why do these systems fail so easily? From the perspective of a computer, determining what a pedestrian looks like can be a challenging task. Human beings present a wide variety of features. An anti-collision system must be able to identify any pedestrian crossing the road, adults and children alike, even a person in a wheelchair. Equally, the system should not apply the brakes when spotting the image of a running child featured on a digital billboard. It must be smart enough to identify real driving hazards and be able to isolate them from non-threatening situations.

Using real-life data to validate the systems and train the algorithms

Beyond the major accidents that hit the newspaper headlines, users of partially autonomous vehicles often report minor system failures on the internet. The more advanced a system is, the more prone it is to fail, often because it does not properly understand the signals collected by the sensors. Recently, a Tesla driver shared a short video in which the car’s Autopilot misreads the red letters of a vertical banner displaying a supermarket’s brand logo and interprets the red letter “O” as a red light. The car was already parked and didn’t stop unexpectedly, but this small error could have resulted in an accident in regular traffic.

Of course, the human brain wouldn’t make this error. But how can engineers prevent the artificial intelligence of a computer from misinterpreting the signs it comes across? The increasing levels of automation require increasing amounts of data to train the AI algorithms and to identify critical scenarios that happen on the road for systems safety performance testing. The data can be generated synthetically, through simulation environments in Simcenter, or using real-life recorded data.

Testing and validating the systems using real-life data is essential. ADAS data collection, which serves both to validate the systems and to train the algorithms, is therefore a key step in the development of self-driving cars.

By driving millions of miles and encountering a myriad of complex driving scenarios, vehicles equipped with ADAS sensors collect the terabytes of data that will be used to train the driving automation algorithms. This data feeds the algorithms with real-world information and helps them understand how a red “O” differs from a red light. Additionally, the collected data is indispensable for testing and validating the vehicle’s ADAS.

But collecting ADAS sensor data isn’t a straightforward task. What would be the consequences of feeding the algorithms with incorrect, incomplete, or corrupt data? Worse, would you trust a system that’s validated by flawed data?

The complexity of collecting ADAS sensor data

Data acquisition and processing have been part of the vehicle development process for many years, so why is it different with ADAS sensor data? Firstly, this data needs to be raw. Only authentic, uncompressed data gives a faithful picture of a complex driving situation. This raw data is essential to the training of the algorithms.

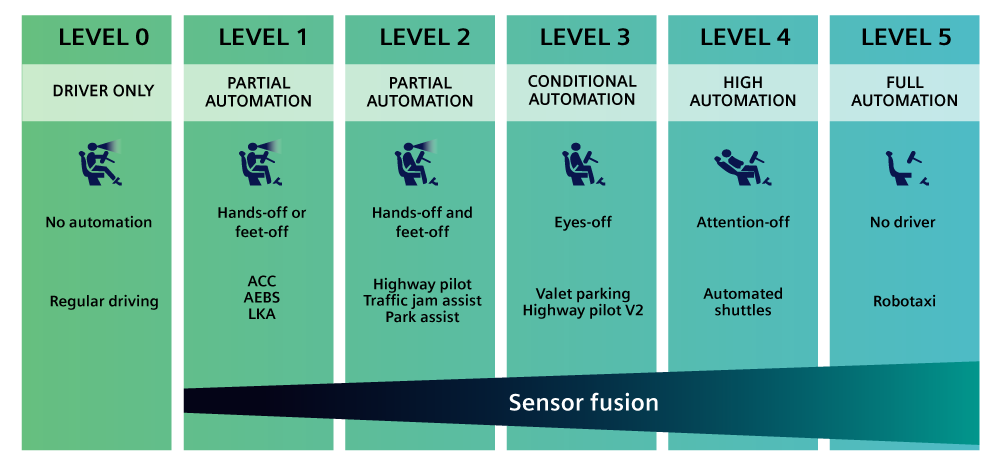

Secondly, as driver assistance systems evolve towards a higher degree of automation, the number of car sensors and the volume of corresponding data increase sharply. The Society of Automotive Engineers (SAE) defines six levels of driving automation, ranging from 0 (fully manual) to 5 (fully autonomous). The accompanying chart illustrates these levels. Many vehicles on the road today feature level 2 partial driving automation, but car manufacturers plan to introduce higher levels of driving automation in the near future. These systems will require more sensors and more data to operate.
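To get a feel for the data volumes involved, the back-of-the-envelope sketch below estimates the raw output of uncompressed cameras. The function names and every figure in the usage note (resolution, bit depth, frame rate, number of cameras) are illustrative assumptions, not specifications of any vehicle or recording product.

```python
def raw_bytes_per_second(width_px, height_px, bits_per_pixel, frames_per_s):
    """Uncompressed video data rate for one camera, in bytes per second."""
    return width_px * height_px * bits_per_pixel * frames_per_s / 8


def terabytes_per_hour(bytes_per_s, sensor_count=1):
    """Recorded volume per hour of driving, in decimal terabytes (1e12 bytes)."""
    return bytes_per_s * sensor_count * 3600 / 1e12
```

Under these assumptions, a single 1920 x 1080 camera at 12 bits per pixel and 30 frames per second produces about 93 MB of raw data every second, and eight such cameras would fill roughly 2.7 TB per hour of driving, before radar and lidar streams are added.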

Thirdly, all this raw data streaming from various sensors must be perfectly synchronized. The slightest delay could give a wrong picture of a driving situation, which could lead to a fatal collision. To prevent this, engineers need to timestamp the data quickly and accurately, as close as possible to the sensors themselves.
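Once every sample carries a timestamp, samples from different sensors can be matched against each other. The sketch below pairs each camera frame with the nearest radar sample and rejects pairs that are too far apart in time. It is a simplified illustration: the function names, the integer microsecond timestamps, and the 5 ms tolerance are assumptions made for this example.

```python
from bisect import bisect_left


def nearest_index(sorted_ts, t):
    """Index of the timestamp in sorted_ts (non-empty, ascending) closest to t."""
    i = bisect_left(sorted_ts, t)
    if i == 0:
        return 0
    if i == len(sorted_ts):
        return len(sorted_ts) - 1
    # Pick whichever neighbour is closer to t.
    return i if sorted_ts[i] - t < t - sorted_ts[i - 1] else i - 1


def align(camera_ts_us, radar_ts_us, tolerance_us=5_000):
    """Pair each camera timestamp with the nearest radar timestamp.

    Returns (camera_t, radar_t) tuples; radar_t is None when no radar
    sample lies within the tolerance (an assumed value of 5 ms here).
    """
    pairs = []
    for t in camera_ts_us:
        match = None
        if radar_ts_us:
            candidate = radar_ts_us[nearest_index(radar_ts_us, t)]
            if abs(candidate - t) <= tolerance_us:
                match = candidate
        pairs.append((t, match))
    return pairs
```

A camera frame with no radar sample inside the tolerance is left unmatched rather than paired with stale data, which reflects the point that a delayed sample can misrepresent the driving situation.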

So, how is this achieved? To accelerate the development of multi-sensor autonomous driving and advanced driver assistance systems, automotive engineers need to rely on a trusted ADAS data collection solution: a complete system that records, stores, analyzes, and replays vast amounts of raw data.

Capture raw ADAS data at ultra-high speed with Simcenter SCAPTOR

With the introduction of Simcenter SCAPTOR, Siemens is the first company in the world that combines a physics-based sensor simulation platform with a raw sensor data acquisition solution in a single portfolio, leveraging simulation to enrich recorded data sets.

Simcenter SCAPTOR makes it possible to record these massive data streams, store them, and upload them to a cloud or local cluster storage solution. It replays recorded sensor data in a time-synchronized way to enable hardware-in-the-loop and software-in-the-loop testing. This makes it possible to run tests in a laboratory environment, where they are repeatable, efficient, and less costly than testing on real roads.

A thorough and well-documented development process is also critical. It ensures the required levels of safety and makes it possible to demonstrate the work done in case of liability claims. A model-based systems engineering workflow and toolchain support this. For autonomous vehicles and ADAS, a major part of the process is validation and verification data, the volume of which is increasing drastically. Most important is the traceability between requirements, test cases, test execution, and test results, supported by documentation. Siemens’ Xcelerator portfolio of integrated technologies is now further extended with traceability towards recorded data for automated driving technology.
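The idea of time-synchronized replay can be illustrated in a few lines. The generic sketch below merges separately recorded sensor streams into a single sequence ordered by timestamp, so that a system under test sees the samples in the order they occurred. It does not represent the Simcenter SCAPTOR implementation or API; the `replay` function and the stream layout are assumptions made for this example.

```python
import heapq


def replay(streams):
    """Yield (timestamp_us, sensor, payload) from all streams in time order.

    `streams` maps a sensor name to a list of (timestamp_us, payload)
    tuples that is already sorted by timestamp (hypothetical layout).
    """
    tagged = (
        [(ts, name, payload) for ts, payload in samples]
        for name, samples in streams.items()
    )
    # heapq.merge lazily interleaves the already-sorted inputs.
    yield from heapq.merge(*tagged, key=lambda record: record[0])
```

Feeding the same recording through such a merge always yields the same ordering, which is what makes laboratory tests on recorded data repeatable.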